Apple iPhone’s Voice-to-Text Feature Causes Stir with Controversial Autocorrect

Voice Recognition Glitch Raises Eyebrows

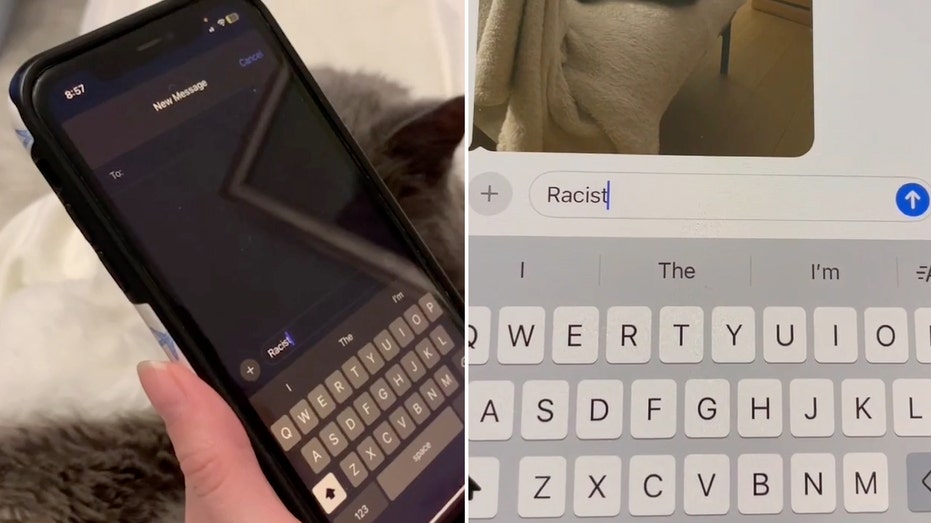

Apple’s iPhone is at the center of a heated debate after a viral TikTok video showed a peculiar glitch in its voice-to-text feature. In the clip, a user dictates the word “racist,” which momentarily appears as “Trump” before reverting to the intended term. The result has ignited discussion about the reliability of speech recognition on smartphones.

Replicating the Controversy

Fox News Digital investigated the issue and reproduced the behavior multiple times. When the word “racist” was dictated, the voice-to-text function occasionally flashed “Trump” before quickly correcting itself to “racist.” The glitch did not occur on every attempt; the feature sometimes misinterpreted the word as “reinhold” or “you” instead. Most of the time, however, it transcribed “racist” accurately.

Apple Responds to the Issue

As the controversy grew, an Apple spokesperson said the company is aware of the problem and is working on a resolution. “We are aware of an issue with the speech recognition model that powers Dictation, and we are rolling out a fix as soon as possible,” the spokesperson said. The company attributed the glitch to phonetic overlap, in which speech recognition models may briefly display an incorrect word before settling on the correct one. The bug appears to affect other words containing the letter “r” as well.
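To illustrate the kind of behavior Apple describes, here is a toy sketch of a recognizer that shows its best guess while audio is still arriving and revises it as more evidence accumulates. This is not Apple's implementation: the function name `displayed_words`, the placeholder candidates `alpha` and `beta`, and every score are invented for illustration.

```python
from typing import Dict, List


def displayed_words(frame_scores: List[Dict[str, float]]) -> List[str]:
    """Return the word shown on screen after each chunk of audio.

    Each element of frame_scores maps candidate words to a score for one
    chunk of audio. Scores are summed per candidate, and the candidate
    with the highest running total is the one displayed.
    """
    totals: Dict[str, float] = {}
    shown: List[str] = []
    for scores in frame_scores:
        for word, score in scores.items():
            totals[word] = totals.get(word, 0.0) + score
        # Display whichever candidate is currently ahead.
        shown.append(max(totals, key=lambda w: totals[w]))
    return shown


# Hypothetical scores: "alpha" and "beta" stand in for two words that
# sound alike at the start. The early audio favors "alpha"; the later
# audio favors "beta".
frames = [
    {"alpha": 0.6, "beta": 0.4},
    {"alpha": 0.3, "beta": 0.7},
    {"alpha": 0.1, "beta": 0.9},
]

print(displayed_words(frames))  # ['alpha', 'beta', 'beta']
```

In this sketch the first guess appears briefly and is then replaced once the rest of the word has been heard, which matches the flash-then-correct pattern seen in the video. It says nothing about why a particular wrong word would be the early favorite in a real model.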

Historical Context of Technology and Political Bias

This is not the first time technology has faced scrutiny over perceived political bias. In September, a viral video showed Amazon’s Alexa providing reasons to vote for then-Vice President Kamala Harris while refusing to do the same for Donald Trump. Following the uproar, Amazon representatives briefed House Judiciary Committee staffers on the incident, explaining that Alexa uses pre-programmed manual overrides to respond to specific user prompts.

Addressing Alexa’s Political Responses

During the briefing, Amazon revealed that Alexa had been programmed to withhold content promoting particular political parties or candidates, including Trump and President Joe Biden. Harris, however, had not been included in the manual overrides because few users had asked about her candidacy. After the video gained traction, Amazon implemented a manual override for questions about Harris within two hours.
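The gap described at the briefing can be pictured with a minimal sketch of an override list. Amazon has not published how Alexa's overrides work, so everything here is an assumption made for illustration: the names `respond`, `generate_answer`, and `DECLINE`, the wording of the canned reply, and the placeholder candidate names.

```python
from typing import Set

# Hypothetical canned reply used whenever an override matches.
DECLINE = "I can't provide content that promotes a specific candidate."


def generate_answer(prompt: str) -> str:
    """Stand-in for the assistant's normal, non-overridden answer path."""
    return "[generated answer to: " + prompt + "]"


def respond(prompt: str, overrides: Set[str]) -> str:
    """Return the canned reply if the prompt mentions an overridden name.

    Any name missing from `overrides` falls through to the normal path,
    which is the kind of gap the briefing described.
    """
    text = prompt.lower()
    if any(name in text for name in overrides):
        return DECLINE
    return generate_answer(prompt)


# Placeholder names; "candidate c" has not been added to the list yet.
overrides = {"candidate a", "candidate b"}

print(respond("Why should I vote for Candidate A?", overrides))
print(respond("Why should I vote for Candidate C?", overrides))

# Adding the missing name closes the gap.
overrides.add("candidate c")
print(respond("Why should I vote for Candidate C?", overrides))
```

A list like this only covers the names someone has thought to add, which is why an audit of all candidates and election-related prompts is the natural follow-up.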

Amazon’s Commitment to Neutrality

At the briefing, Amazon apologized for any perceived bias exhibited by Alexa and emphasized its commitment to keeping political opinions out of the assistant’s responses. The company has since audited its systems and put manual overrides in place for all candidates and for a range of election-related prompts, where the overrides had previously focused on presidential candidates alone.

Conclusion

As voice technology evolves, accurate and impartial systems become increasingly important. The recent incidents involving Apple’s Dictation and Amazon’s Alexa are reminders of how difficult it is to build digital assistants that users perceive as neutral. Both companies will need to keep improving their systems to prevent similar controversies.